Minutes before our first customer demo, a sensor mount on our retrofitted excavator snapped, our computers lost their Wi-Fi connection, and the electrical system began faulting. I did not know what to expect as employee number four at a construction robotics startup, but climbing ladders in the Texas heat to fix a broken bracket I had designed was not what I had envisioned!

By the end of my internship at TerraFirma, however, I had worked on everything from computer vision to the remote control of giant construction vehicles. It was an intense three months in which I tried to maximize my rate of learning. Below are some of the projects I worked on.

Project: The Totem

An important goal for us was to let a single operator control multiple construction vehicles simultaneously and remotely. For construction workers to adopt the solution, the control interface needed to be intuitive while also doubling their productivity.

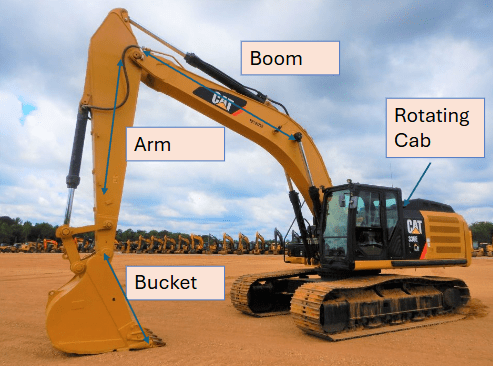

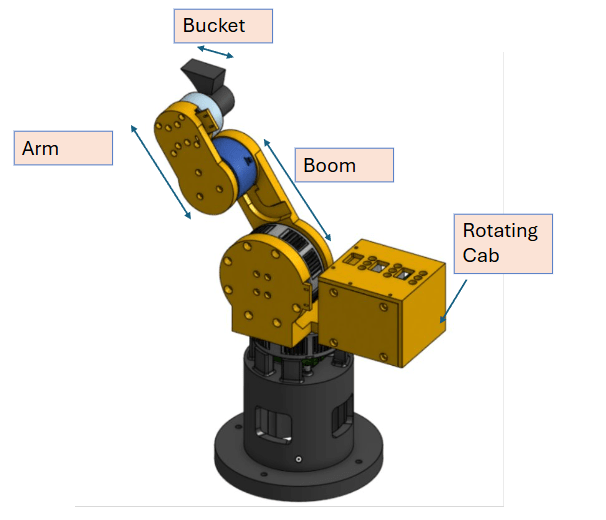

The first prototype of this control interface that I developed was the Totem: a 4-DOF miniature robotic arm designed to remotely control an excavator. Our pursuit of simplicity led us to ask how a child would control a construction machine, and the answer was a toy. The Totem was a scaled-down, simplified toy excavator.

Each joint in the Totem used a quasi-direct drive (a motor paired with a low-ratio gearbox), and motor selection was subject to the following constraints:

- Packaging: The arm needed to be small enough for the operator to manipulate the bucket comfortably while still receiving sufficient force feedback. Because the joints formed a serial chain, every motor added load to the joints closer to the base, so motor weight also mattered.

- Torque: To simulate the feeling of driving a real excavator, the control interface needed to provide force feedback to the operator based on the excavator’s movements. We achieved this by commanding a motor torque proportional to the difference between the joint positions of the Totem and the real excavator. No hard metrics or calculations drove this torque requirement; instead, we set up an experiment in which we replicated the motion of the arm and used a force gauge to find the force that “felt” right.

- Back-drivability: Since the operator would move the Totem’s joints by hand, each motor needed to be easily back-drivable without providing too much resistance.

- Thermal limits: The motors’ temperature limits could not be exceeded.

- FOC compatibility: The motors needed to support Field-Oriented Control.
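
The torque requirement above describes what amounts to a virtual spring between each Totem joint and the matching excavator joint. Below is a minimal sketch of that law for a single joint, assuming a hypothetical stiffness `K_FEEDBACK` and torque limit `TAU_MAX`. The clamp is an added assumption standing in for the motor's torque and thermal limits, not a detail of the Totem's actual firmware.

```python
# Minimal sketch of position-error force feedback for one Totem joint.
# K_FEEDBACK and TAU_MAX are hypothetical values, not the ones tuned on the Totem.

K_FEEDBACK = 2.0  # virtual spring stiffness, N*m per rad (hypothetical)
TAU_MAX = 1.5     # torque clamp, N*m (hypothetical; stands in for motor/thermal limits)


def feedback_torque(q_totem: float, q_excavator: float,
                    k: float = K_FEEDBACK, tau_max: float = TAU_MAX) -> float:
    """Return the torque (N*m) to command on a Totem joint.

    The motor pulls the Totem joint toward the excavator's measured joint
    angle, so the operator feels a resistance that grows with how far the
    real machine lags behind the hand-held model.
    """
    tau = k * (q_excavator - q_totem)
    # Saturate so the command never exceeds the motor's allowed torque.
    return max(-tau_max, min(tau_max, tau))


# The operator has pushed the Totem boom 0.3 rad ahead of the real boom,
# so the commanded torque is negative (pulling the Totem back).
print(feedback_torque(q_totem=0.8, q_excavator=0.5))
```

In this sketch, the force-gauge experiment would correspond to choosing `k`: a larger stiffness makes the arm feel heavier for the same position lag.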

Brushless DC motors with a gear ratio below 10:1 were chosen based on the requirements above. With the motors selected and an initial design finished, it was time to build and assemble this prototype.
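
The reason for keeping the ratio low is that an N:1 gearbox multiplies joint torque by N but multiplies the rotor inertia felt at the joint by N² (and reflects motor friction and cogging torque by roughly N), which is what makes high-ratio joints hard to back-drive. A rough comparison using placeholder motor numbers (all hypothetical, not our motors' datasheet values):

```python
# Rough comparison of how gear ratio trades joint torque against back-drivability.
# TAU_MOTOR, J_ROTOR and EFFICIENCY are hypothetical placeholders, not datasheet values.

TAU_MOTOR = 0.5    # continuous motor torque, N*m
J_ROTOR = 5e-5     # rotor inertia, kg*m^2
EFFICIENCY = 0.95  # gearbox efficiency


def output_torque(n: float, tau_motor: float = TAU_MOTOR, eta: float = EFFICIENCY) -> float:
    """Torque available at the joint after an n:1 reduction (scales with n)."""
    return n * eta * tau_motor


def reflected_inertia(n: float, j_rotor: float = J_ROTOR) -> float:
    """Rotor inertia felt when turning the joint by hand (scales with n squared)."""
    return n ** 2 * j_rotor


for n in (1, 6, 9, 30):
    print(f"{n:>2}:1  joint torque = {output_torque(n):5.2f} N*m  "
          f"reflected inertia = {reflected_inertia(n):.2e} kg*m^2")
```

Going from 9:1 to 30:1 in this sketch buys about 3.3 times the torque but roughly 11 times the reflected inertia, which is the trade-off a sub-10:1 ratio avoids.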

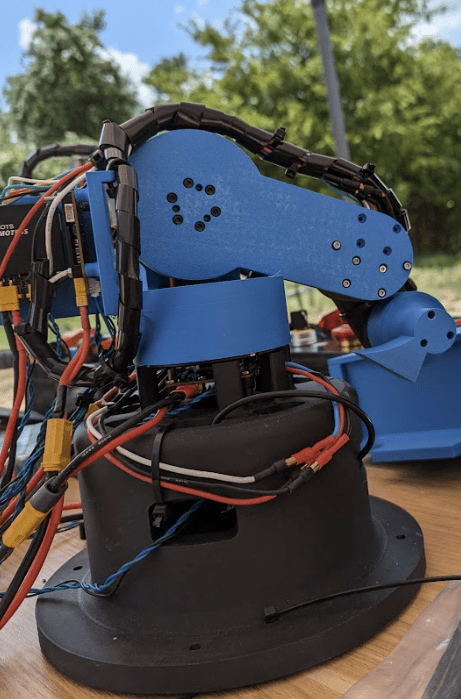

The process

Speed of iteration is essential at a scrappy startup, and I was expected to design, build, and have a working prototype within a week. All parts were 3D-printed in PLA to save time, and we used open-source encoders from MJBots. Soldering all the wires, connectors, and boards was the most tedious part of the assembly, and my shaky hands did not help! I also calibrated and configured the motors for use with our control system.

Mistakes

Cable management was one of the biggest flaws of this design, since I did not have enough time to plan efficient cable routes. The messy wiring also hurt ergonomics by making the arm bulkier and harder to manipulate. Fixing it was left for a later iteration.

The Results

After days of debugging, fine-tuning PID gains, and resolving other issues with the operator station, I had the chance to control two excavators simultaneously. The video below shows the operator’s experience!

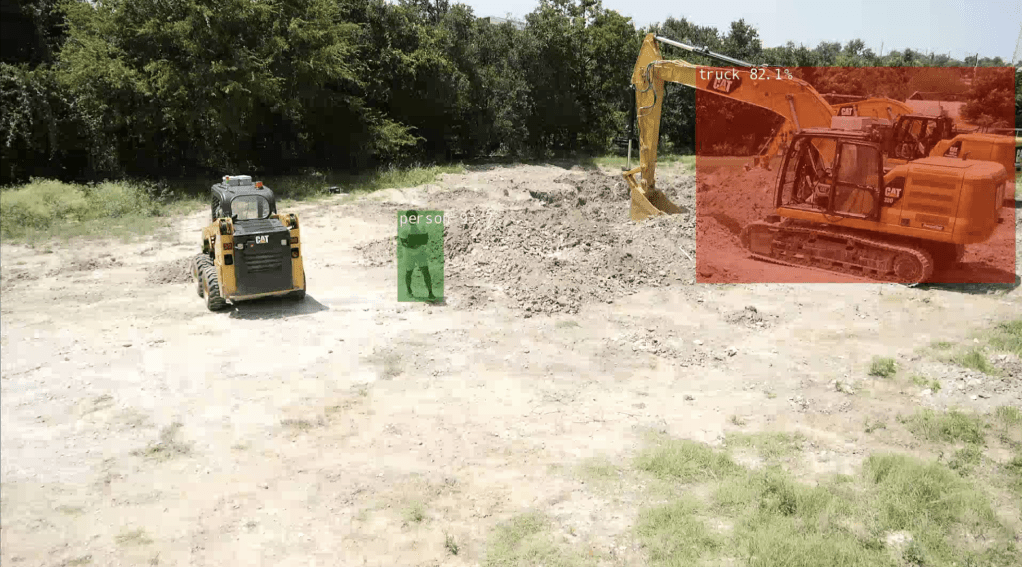
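
The post does not say which control loops were PID-tuned. As an illustration only, here is a generic discrete PID position loop of the kind that might drive a joint toward a target angle (for example, an excavator joint following the Totem). The gains, the integral clamp, and the toy integrator plant are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PID:
    """Discrete PID controller with a clamped integral term (sketch, hypothetical gains)."""
    kp: float
    ki: float
    kd: float
    integral_limit: float = 1.0  # bound on the accumulated integral to limit windup
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        """Return the control output for one time step of length dt (seconds)."""
        error = setpoint - measurement
        # Accumulate and clamp the integral so a long saturation can't wind it up.
        self._integral = max(-self.integral_limit,
                             min(self.integral_limit, self._integral + error * dt))
        # Skip the derivative on the first call, since there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


# Toy plant: the joint's velocity equals the controller output (a pure integrator).
pid = PID(kp=5.0, ki=0.0, kd=0.0)
angle, target, dt = 0.0, 1.0, 0.01
for _ in range(200):
    angle += pid.update(target, angle, dt) * dt
print(f"angle after 2 s: {angle:.4f} rad")
```

Tuning then amounts to raising `kp` until the joint tracks briskly without oscillating, and adding `ki` and `kd` only if steady-state error or overshoot remain.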

Project: Live Person Detection

Safety during the operation of these powerful construction vehicles was paramount to our customers, who had never seen an excavator driven without a person inside. I was responsible for ensuring that our robots could not operate while a person was nearby. Our existing safety system was robust, but it relied on a human operator constantly watching the construction site with an e-stop box close at hand. To make the system more flexible, I implemented live object detection that would shut down all vehicles if a person was detected in any of the camera feeds.
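
The shutdown rule described above can be sketched as a small latched interlock: if any camera reports a person, every vehicle stops and stays stopped until an operator deliberately resets it. The `Detection` type, the `"person"` label, the confidence threshold, and the reset policy are all hypothetical assumptions, not details of the original system.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence


@dataclass(frozen=True)
class Detection:
    label: str         # class name reported by the detector
    confidence: float  # detector score in [0, 1]


class PersonInterlock:
    """Latched stop: once a person is seen on any camera, stay stopped until reset.

    Hypothetical sketch; the threshold, the "person" label and the reset policy
    are assumptions, not details of the original system.
    """

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.stopped = False

    def update(self, per_camera: Iterable[Sequence[Detection]]) -> bool:
        """Process the latest detections (one list per camera); return True if vehicles must stop."""
        for detections in per_camera:
            if any(d.label == "person" and d.confidence >= self.threshold for d in detections):
                self.stopped = True
        return self.stopped

    def reset(self) -> None:
        """Clear the stop; meant to be called deliberately by the operator."""
        self.stopped = False


interlock = PersonInterlock(threshold=0.5)
print(interlock.update([[Detection("truck", 0.9)], []]))    # no person: False
print(interlock.update([[], [Detection("person", 0.8)]]))   # person on camera 2: True
print(interlock.update([[], []]))                           # still latched: True
```

Latching means a single missed detection on a later frame cannot restart the machines. A fuller version would likely also treat a stale or dropped camera feed as a stop condition, which this sketch omits.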

Having never worked with computer vision before, I tested pre-trained object detection models on different single-board computers. I started with a lightweight YOLO model on a Raspberry Pi and, to compensate for poor frame rates, experimented with a Coral TPU accelerator to speed up inference. However, this setup still did not perform well enough.
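
Comparing the Raspberry Pi, the Coral accelerator, and later the Jetson comes down to measuring throughput the same way on each platform. Below is a minimal, framework-agnostic timing helper; `infer` is a hypothetical placeholder for whichever detector call a given platform provides, and the warm-up count is an assumption.

```python
import time
from typing import Callable, Sequence, TypeVar

Frame = TypeVar("Frame")


def measure_fps(infer: Callable[[Frame], object], frames: Sequence[Frame], warmup: int = 5) -> float:
    """Return the average frames per second that `infer` sustains over `frames`.

    The first `warmup` frames are run but not timed, since early inferences are
    often slower (model loading, memory allocation, accelerator start-up).
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames[warmup:]:
        infer(frame)
    elapsed = time.perf_counter() - start
    return (len(frames) - warmup) / elapsed


# Stand-in workload; on a real board `infer` would wrap the detector call.
def fake_detect(frame: Sequence[int]) -> int:
    return sum(frame)


frames = [list(range(10_000))] * 50
print(f"{measure_fps(fake_detect, frames):.0f} FPS")
```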

I upgraded to a Jetson Nano and used SSD-MobileNet-V2, a model trained to detect a wide range of object classes that strikes a good balance between detection speed and accuracy. The Jetson’s faster inference was sufficient, but I still had to solve the problem of remotely viewing a live stream with the detections overlaid.

With multiple cameras already set up to give the operator different views while remotely controlling machines, the challenge was to stream the camera feeds to any device of the user’s choice. I used RTSP to stream the live object detection output from the cameras to multiple devices.
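
The post does not say which software produced the RTSP stream. One common pattern, shown here only as an assumption, is to pipe each annotated frame into ffmpeg, which encodes it and publishes it to a separate RTSP server (for example MediaMTX); viewers then open the same URL in any RTSP-capable player. The resolution, frame rate, and URL below are hypothetical.

```python
import shlex
from typing import List


def rtsp_publish_command(width: int, height: int, fps: int, rtsp_url: str) -> List[str]:
    """Build an ffmpeg command that reads raw BGR frames on stdin and publishes H.264 over RTSP.

    Assumes an RTSP server is already listening at `rtsp_url`; ffmpeg acts
    only as the publishing client.
    """
    return [
        "ffmpeg",
        "-f", "rawvideo", "-pix_fmt", "bgr24",   # uncompressed frames in BGR order, as OpenCV uses
        "-s", f"{width}x{height}", "-r", str(fps),
        "-i", "-",                               # read those frames from stdin
        "-c:v", "libx264", "-preset", "ultrafast", "-tune", "zerolatency",
        "-pix_fmt", "yuv420p",                   # widely supported by players
        "-f", "rtsp", "-rtsp_transport", "tcp",  # publish over RTSP, carried on TCP
        rtsp_url,
    ]


cmd = rtsp_publish_command(640, 480, 15, "rtsp://localhost:8554/cam1")
print(shlex.join(cmd))
# To stream: proc = subprocess.Popen(cmd, stdin=subprocess.PIPE), then write each
# annotated frame's raw bytes to proc.stdin.
```

Keeping encoding in a separate ffmpeg process leaves the detection loop free to focus on inference, and one server URL can serve many viewers at once.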

This video shows an early test of our shutdown system: https://youtube.com/shorts/b19Gysvpc3E